Unleash the Power of Clean Data: A Comprehensive Guide to Data Cleansing Techniques

Clean data is the foundation of accurate analysis and informed decision-making. This guide presents eight essential data cleansing techniques for turning raw data into actionable insights. Learn how to tackle common data quality issues, from duplicate entries and missing values to inconsistent formatting and outliers. Each technique comes with real-world use cases, actionable tips, and guidance on when to apply it in different business scenarios.

This listicle is invaluable for professionals who regularly work with data, including:

- Project managers

- Financial analysts

- Marketing managers

- Operations managers

- Sales teams

Mastering these data cleansing techniques will empower you to:

- Improve the accuracy of reports and dashboards

- Make better, data-driven decisions

- Increase efficiency by automating data cleaning tasks

- Enhance the reliability of your data analysis

By the end of this guide, you will have a practical understanding of eight essential data cleansing techniques: duplicate detection, data validation, missing value imputation, outlier treatment, data standardization, data profiling, text mining cleansing, and record linkage. Each section explains what the technique does, where it is used, and which concrete steps you can take to implement it, so you can confidently tackle your own data challenges.

1. Duplicate Detection and Removal

Duplicate data is a common problem that can significantly impact data quality, leading to inaccurate analysis and flawed decision-making. Duplicate detection and removal, a fundamental data cleansing technique, addresses this by identifying and eliminating redundant records within datasets. This process enhances data accuracy, improves efficiency, and ensures data integrity.

This technique is crucial for various data cleansing tasks, from simple list cleaning to complex database deduplication. It involves comparing records across one or more fields to find exact matches as well as near-duplicates (fuzzy matches) that differ only in spelling, formatting, or abbreviation. The similarity measures and match thresholds should be chosen to suit the specific dataset and its characteristics.
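The matching step can be illustrated with a minimal Python sketch (the guide itself does not prescribe code). It assumes each record is a dictionary with hypothetical `name` and `email` fields, normalizes case and whitespace before exact matching, and uses the standard library's `difflib.SequenceMatcher` ratio as one possible fuzzy similarity measure. The 0.85 threshold is an illustrative assumption that would need tuning on real data.

```python
from difflib import SequenceMatcher


def normalize(value: str) -> str:
    """Lowercase and collapse internal whitespace so trivial formatting differences don't hide exact matches."""
    return " ".join(value.lower().split())


def exact_duplicates(records, fields):
    """Return (first_index, duplicate_index) pairs whose normalized key fields are identical."""
    first_seen = {}
    pairs = []
    for i, rec in enumerate(records):
        key = tuple(normalize(rec[f]) for f in fields)
        if key in first_seen:
            pairs.append((first_seen[key], i))
        else:
            first_seen[key] = i
    return pairs


def fuzzy_duplicates(records, field, threshold=0.85):
    """Return index pairs whose normalized field values have a similarity ratio >= threshold.

    Every pair is compared (O(n^2)), so this only suits small datasets unless blocking is added.
    """
    pairs = []
    for i in range(len(records)):
        for j in range(i + 1, len(records)):
            a, b = normalize(records[i][field]), normalize(records[j][field])
            if SequenceMatcher(None, a, b).ratio() >= threshold:
                pairs.append((i, j))
    return pairs


# Hypothetical sample data.
customers = [
    {"name": "Jane Smith", "email": "jane@example.com"},
    {"name": "jane  smith", "email": "JANE@example.com"},
    {"name": "Jon Smyth", "email": "jon@example.com"},
    {"name": "John Smyth", "email": "john@example.com"},
]

print(exact_duplicates(customers, ["name", "email"]))  # [(0, 1)]
print(fuzzy_duplicates(customers, "name"))             # [(0, 1), (2, 3)]
```

The fuzzy pass flags "Jon Smyth" and "John Smyth" as a candidate pair even though they may be different people, which is why uncertain matches belong in a human review queue rather than being deleted automatically.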

Examples of Duplicate Detection and Removal

- Netflix: Improves recommendation accuracy by removing duplicate user profiles. This ensures personalized recommendations are not skewed by redundant data.

- Banking Institutions: Eliminate duplicate customer records for KYC (Know Your Customer) compliance. This helps prevent fraud and ensures accurate customer identification.

- E-commerce Platforms: Deduplicate product catalogs from multiple suppliers. This provides a clean and consistent product listing for customers.

Actionable Tips for Effective Deduplication

- Start Simple: Begin with exact match detection before progressing to more complex fuzzy matching techniques. This helps identify the most obvious duplicates quickly.

- Reduce Complexity: Use blocking techniques to narrow down the comparison scope, reducing computational complexity and improving efficiency.

- Human Oversight: Implement human-in-the-loop validation for uncertain matches. This ensures accuracy and prevents accidental deletion of valuable data.

- Business Rules: Consider specific business rules when defining what constitutes a duplicate. This context-specific approach avoids unnecessary data loss.
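Blocking, as described in the tips above, can be sketched in a few lines of Python. The blocking key here (first letter of the surname plus postal code) and the `surname`/`zip` fields are hypothetical choices for illustration. Records are only compared with others in the same block, which cuts the number of comparisons at the cost of missing duplicates whose key fields differ.

```python
from collections import defaultdict
from itertools import combinations


def block_key(record):
    """Hypothetical blocking key: first letter of the surname plus the postal code."""
    return (record["surname"][:1].upper(), record["zip"].strip())


def candidate_pairs(records):
    """Group records by blocking key and return only within-block index pairs."""
    blocks = defaultdict(list)
    for i, rec in enumerate(records):
        blocks[block_key(rec)].append(i)
    pairs = []
    for indices in blocks.values():
        pairs.extend(combinations(indices, 2))
    return pairs


# Hypothetical sample data.
records = [
    {"surname": "Smith", "zip": "10001"},
    {"surname": "Smyth", "zip": "10001"},
    {"surname": "Jones", "zip": "10001"},
    {"surname": "Smith", "zip": "94105"},
]

all_pairs = len(records) * (len(records) - 1) // 2
print(all_pairs, candidate_pairs(records))  # 6 [(0, 1)]
```

Record 3 shares a surname with record 0 but is never compared with it because its postal code differs; if postal codes are unreliable, a looser key or several overlapping keys would be needed.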

When and Why to Use Duplicate Detection and Removal

This data cleansing technique is essential whenever data redundancy poses a threat to data quality and analytical accuracy. It is particularly valuable when:

- Improving data accuracy for reporting and analysis: Eliminating duplicates ensures accurate insights from your data.

- Enhancing the efficiency of data processing: Fewer records translate to faster processing times.

- Maintaining data integrity and consistency: Deduplication prevents inconsistencies caused by redundant information.

- Complying with regulatory requirements: Accurate and unique data is crucial for compliance in various industries.

By implementing robust duplicate detection and removal procedures, organizations can significantly improve the quality and reliability of their data, leading to better business decisions and enhanced operational efficiency. This makes it a critical component of any comprehensive data cleansing strategy.

2. Data Validation and Constraint Checking

Data validation and constraint checking is a systematic approach to ensuring data meets predefined business rules, format requirements, and logical constraints. It checks data against established schemas, business logic, and referential integrity rules, so that errors and inconsistencies are caught before they spread and the data stays accurate, complete, and consistent.

This technique is crucial for maintaining data integrity across various applications and systems. It involves implementing rules and checks at different stages of the data lifecycle, from initial input to storage and processing. These checks can range from simple data type validation (e.g., ensuring a field contains only numbers) to complex business rule enforcement (e.g., verifying that a customer's credit limit is not exceeded).
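A minimal Python sketch of rule-based checking follows. The field names (`customer_id`, `amount`, `order_date`), the rules themselves, and the credit-limit check are hypothetical examples. Keeping the rules as a list of data is a lightweight stand-in for a configurable rule engine, and each failure produces a readable error message instead of a bare pass/fail flag.

```python
from datetime import date

# Hypothetical rules: (field, check, error message). Storing rules as data means
# they can be added or edited without touching the validation logic.
RULES = [
    ("customer_id", lambda v: isinstance(v, str) and v.isdigit(),
     "customer_id must contain only digits"),
    ("amount", lambda v: isinstance(v, (int, float)) and v > 0,
     "amount must be a positive number"),
    ("order_date", lambda v: isinstance(v, date) and v <= date.today(),
     "order_date must be a date that is not in the future"),
]


def validate(record, credit_limit):
    """Return a list of human-readable errors; an empty list means the record passed."""
    # Field-level checks (type and format). Missing fields arrive as None and fail.
    errors = [msg for field, check, msg in RULES if not check(record.get(field))]
    # Cross-field business rule: the order must not exceed the customer's credit limit.
    amount = record.get("amount")
    if isinstance(amount, (int, float)) and amount > credit_limit:
        errors.append(f"amount {amount} exceeds credit limit {credit_limit}")
    return errors


good = {"customer_id": "10042", "amount": 250.0, "order_date": date(2024, 1, 15)}
bad = {"customer_id": "A-17", "amount": 900.0, "order_date": date(2999, 1, 1)}

print(validate(good, credit_limit=500))  # []
for message in validate(bad, credit_limit=500):
    print(message)
```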

Examples of Data Validation and Constraint Checking

- Financial Institutions: Validating transaction amounts against available account balances to prevent overdrafts and ensure accurate financial records.

- Healthcare Systems: Ensuring patient data adheres to HIPAA compliance standards, safeguarding sensitive information and maintaining data integrity.

- Government Agencies: Validating citizen data against official documentation, ensuring accuracy and preventing fraud.

Actionable Tips for Effective Validation

- Implement validation rules in multiple layers: Apply checks at data entry, processing, and storage stages to catch errors early and prevent propagation of inconsistencies.

- Use configurable rule engines: Employ rule engines to manage and modify validation rules easily without requiring code changes.

- Provide clear error messages: Offer informative error messages that guide users towards correcting invalid data, improving user experience and data quality.

- Balance strictness with business needs: Avoid overly restrictive validation rules that may hinder legitimate data entry while maintaining necessary controls.

When and Why to Use Data Validation and Constraint Checking

Data validation is essential whenever data accuracy and consistency are paramount. This technique is particularly valuable when:

- Ensuring data integrity for regulatory compliance: Meeting industry and government regulations through consistent data validation practices.

- Improving the reliability of data for decision-making: Accurate and consistent data enables informed decisions.

- Preventing errors and inconsistencies in downstream processes: Validated data reduces errors in subsequent data processing and analysis.

- Improving data quality for reporting and analytics: Consistent data leads to reliable reports and insightful analysis.

By implementing comprehensive data validation and constraint checking procedures, organizations can significantly enhance the quality, reliability, and trustworthiness of their data. This leads to better decision-making, improved operational efficiency, and reduced risks associated with data errors and inconsistencies.

3. Missing Value Imputation

Missing data is a pervasive issue in datasets, potentially skewing analyses and hindering accurate insights. Missing value imputation, a crucial data cleansing technique, addresses this by filling in missing values using statistical and algorithmic approaches. This keeps the dataset complete for analysis, but because imputed values are estimates rather than observations, the method must be chosen carefully to avoid distorting the data's statistical properties.

This technique employs various methods, ranging from simple mean/median imputation to advanced techniques like regression imputation and k-nearest neighbors. Choosing the right method depends on the nature of the missing data, the dataset's characteristics, and the analytical goals. Accurate imputation minimizes bias and ensures the reliability of subsequent data analysis.
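Two points on that range can be sketched in plain Python. Median imputation fills every gap with one robust central value; the k-nearest-neighbors version fills a gap with the average of the k most similar complete rows. The column names and k=2 are hypothetical, and the sketch assumes the feature columns are already on comparable scales (otherwise the distance is dominated by the largest-valued column). Libraries such as scikit-learn provide production versions of both approaches (`SimpleImputer` and `KNNImputer`).

```python
import math
from statistics import median


def median_impute(values):
    """Replace None with the median of the observed values."""
    fill = median([v for v in values if v is not None])
    return [fill if v is None else v for v in values]


def knn_impute(rows, target, features, k=2):
    """Fill missing `target` values with the mean target of the k nearest complete rows.

    Distance is Euclidean over `features`; rows with a missing feature are not handled here.
    """
    complete = [r for r in rows if r[target] is not None]

    def distance(a, b):
        return math.dist([a[f] for f in features], [b[f] for f in features])

    result = []
    for row in rows:
        filled = dict(row)  # copy so the input rows are not modified
        if row[target] is None:
            nearest = sorted(complete, key=lambda c: distance(row, c))[:k]
            filled[target] = sum(c[target] for c in nearest) / len(nearest)
        result.append(filled)
    return result


print(median_impute([4.0, None, 7.0, 5.0]))  # [4.0, 5.0, 7.0, 5.0]

# Hypothetical customer rows: age and tenure (years) used to estimate monthly spend.
rows = [
    {"age": 25, "tenure": 1, "spend": 120.0},
    {"age": 27, "tenure": 2, "spend": 140.0},
    {"age": 60, "tenure": 20, "spend": 400.0},
    {"age": 26, "tenure": 1, "spend": None},
]
print(knn_impute(rows, "spend", ["age", "tenure"])[3])  # {'age': 26, 'tenure': 1, 'spend': 130.0}
```

Median imputation would have filled the missing spend with 140.0 (the median of all observed values), while kNN draws only on the two most similar customers.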

Examples of Missing Value Imputation

- Clinical Trials: Imputing missing patient measurements is vital for FDA submissions, allowing for comprehensive data analysis even with incomplete patient records.

- Market Research: Filling missing survey responses enables accurate demographic analysis and market segmentation, providing valuable insights for marketing strategies.

- IoT Sensor Networks: Interpolating missing readings from sensor networks ensures continuous environmental monitoring and facilitates timely responses to environmental changes.

Actionable Tips for Effective Imputation

- Analyze Missing Data Patterns: Understand the reasons for missing data (Missing Completely at Random, Missing at Random, or Missing Not at Random) before selecting an imputation method. This informed approach minimizes bias.

- Multiple Imputation: For critical analyses, use multiple imputation to generate several imputed datasets, capturing the uncertainty associated with the imputation process. This provides more robust and reliable results.

- Cross-Validation: Validate the quality of imputation by using cross-validation techniques. This helps assess the imputation method's performance and identify potential issues.

- Documentation: Document the chosen imputation method, parameters, and rationale. This ensures reproducibility, transparency, and facilitates future audits and analyses.
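One practical way to apply the cross-validation tip is to hide some values you already know, impute them, and measure how far the estimates land from the truth. The sketch below does this in plain Python; the 20% mask fraction, the fixed random seed, and the sample data are illustrative assumptions. Masking values at random only mimics data that is Missing Completely at Random, so the check can look optimistic when real missingness follows a pattern.

```python
import random
from statistics import mean, median


def mean_fill(values):
    fill = mean([v for v in values if v is not None])
    return [fill if v is None else v for v in values]


def median_fill(values):
    fill = median([v for v in values if v is not None])
    return [fill if v is None else v for v in values]


def masked_error(values, impute, mask_fraction=0.2, seed=0):
    """Hide a random share of known values, impute them, and return the mean absolute error."""
    rng = random.Random(seed)  # fixed seed so every method is scored on the same mask
    known = [i for i, v in enumerate(values) if v is not None]
    hidden = set(rng.sample(known, max(1, int(len(known) * mask_fraction))))
    masked = [None if i in hidden else v for i, v in enumerate(values)]
    filled = impute(masked)
    return mean(abs(filled[i] - values[i]) for i in hidden)


# Hypothetical daily order counts with one extreme day and one genuine gap.
orders = [10, 11, 12, 11, 10, 95, 12, 11, None, 12]
for name, method in [("mean", mean_fill), ("median", median_fill)]:
    print(name, round(masked_error(orders, method), 2))
```

With this few masked values the score is noisy; repeating the check over several seeds and averaging gives a steadier comparison between methods.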

When and Why to Use Missing Value Imputation

Missing value imputation is essential when incomplete data hinders analysis and potentially leads to biased conclusions. This technique is particularly valuable when:

- Preserving Statistical Power: Maintaining a complete dataset ensures sufficient statistical power for meaningful analysis.

- Minimizing Bias: Accurate imputation reduces bias caused by missing data, leading to more reliable insights.

- Enabling Comprehensive Analysis: A complete dataset allows for a more comprehensive exploration of the data and facilitates a wider range of statistical techniques.

- Improving Model Performance: In predictive modeling, imputation can improve model accuracy and robustness by providing complete input data.

By implementing appropriate missing value imputation techniques, organizations can effectively handle incomplete data, ensuring the accuracy and reliability of their analyses. This ultimately leads to more informed decision-making and improved business outcomes, solidifying its place as a vital data cleansing technique.

4. Outlier Detection and Treatment

Outlier detection and treatment is a crucial data cleansing technique that focuses on identifying and handling data points that deviate significantly from the normal pattern or distribution. These anomalous values can arise from various sources, including errors in data entry, measurement inaccuracies, or genuinely exceptional cases. Addressing outliers is essential for ensuring the accuracy and reliability of data analysis and modeling.

This technique employs statistical methods and machine learning algorithms to pinpoint outliers within datasets. These methods range from simple statistical rules (such as z-scores or the interquartile range) and visualizations like box plots and scatter plots to more sophisticated techniques like clustering and regression analysis. Identifying these outliers allows for informed decisions on how to handle them, whether that means correcting errors, investigating unusual events, or removing them from the dataset altogether.
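Two common statistical rules can be sketched with Python's standard `statistics` module: the interquartile-range (IQR) rule that box plots use for their whiskers (Tukey's fences at 1.5 times the IQR beyond the quartiles), and the z-score rule. The sample data and the thresholds (1.5 and 3.0, both conventional defaults) are illustrative.

```python
from statistics import mean, quantiles, stdev


def iqr_outliers(values, k=1.5):
    """Flag values outside Tukey's fences: below Q1 - k*IQR or above Q3 + k*IQR."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


def zscore_outliers(values, threshold=3.0):
    """Flag values more than `threshold` sample standard deviations from the mean."""
    mu, sigma = mean(values), stdev(values)
    return [v for v in values if abs(v - mu) / sigma > threshold]


# Hypothetical transaction amounts with one suspicious entry (perhaps a misplaced decimal).
amounts = [52, 48, 50, 47, 53, 49, 51, 50, 480]

print(iqr_outliers(amounts))     # [480]
print(zscore_outliers(amounts))  # []
```

The z-score rule misses the extreme value because that value inflates the very mean and standard deviation it is measured against; with only nine points, no value can have a sample z-score above 8/3 (about 2.67). This masking effect is one reason to combine methods and to prefer robust statistics such as the IQR on small samples. Either way, flagged values should be investigated before anything is deleted.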

Examples of Outlier Detection and Treatment

- Credit Card Companies: Detecting potentially fraudulent transactions by identifying spending patterns that deviate significantly from a customer's usual behavior.

- Manufacturing Quality Control: Pinpointing defective products by analyzing measurements that fall outside acceptable tolerances.

- Healthcare Monitoring: Identifying abnormal patient vital signs, enabling timely intervention and improved patient care.

Actionable Tips for Effective Outlier Handling

- Combine Methods: Utilize multiple outlier detection methods for a more comprehensive and robust identification process.

- Investigate, Don't Just Delete: Thoroughly investigate outliers before automatically removing them, as they may represent valuable insights.

- Domain Expertise: Incorporate domain expertise when setting thresholds for outlier detection, ensuring context-specific identification.

- Ensemble Methods: Consider using ensemble methods, which combine multiple models, for enhanced detection accuracy.

When and Why to Use Outlier Detection and Treatment

Outlier detection and treatment is invaluable whenever data quality and analytical accuracy are paramount. It's especially beneficial when:

- Developing Predictive Models: Correcting, capping, or removing erroneous outliers can improve the accuracy and reliability of predictive models.

- Ensuring Data Integrity: Outliers often reveal data entry or measurement errors, which can then be corrected.

- Detecting Fraud and Anomalies: Outlier analysis helps identify unusual patterns that may indicate fraudulent activities or other anomalies.

- Improving Statistical Analysis: Treating erroneous outliers keeps them from distorting means, variances, and other summary statistics.

By incorporating effective outlier detection and treatment procedures, organizations can strengthen the robustness of their data analysis, leading to more reliable insights and better informed decision-making.

5. Data Standardization and Normalization

Data standardization and normalization is a systematic process of converting data into consistent formats, units, and scales. This ensures uniformity across datasets, enabling meaningful comparisons and analysis. This technique encompasses both structural standardization (format consistency, like date formats or naming conventions) and statistical normalization (scale adjustment, like transforming values to a specific range). Implementing this technique enhances data quality, improves interoperability between different systems, and provides a solid foundation for accurate reporting and analysis.

This technique is crucial for various data cleansing tasks, from simple data entry validation to complex database integration. It involves defining clear transformation rules and applying them consistently across the dataset. These rules can be based on industry standards, internal business requirements, or statistical methods, depending on the specific data and its intended use.
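On the statistical side, normalization usually means min-max scaling (mapping values onto a fixed range such as 0 to 1) or z-score standardization (rescaling to mean 0 and standard deviation 1). A plain-Python sketch of both, using hypothetical revenue figures that are assumed to be in a single currency already:

```python
from statistics import mean, pstdev


def min_max(values, new_min=0.0, new_max=1.0):
    """Linearly rescale values so the smallest maps to new_min and the largest to new_max."""
    lo, hi = min(values), max(values)
    if hi == lo:  # constant column: avoid division by zero
        return [new_min for _ in values]
    return [new_min + (v - lo) * (new_max - new_min) / (hi - lo) for v in values]


def z_scores(values):
    """Rescale values to mean 0 and (population) standard deviation 1."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


revenue = [200, 400, 600, 1000]  # hypothetical store revenues
print(min_max(revenue))  # [0.0, 0.25, 0.5, 1.0]
print([round(z, 2) for z in z_scores(revenue)])
```

Because a single extreme value sets the minimum or maximum, min-max scaling is sensitive to outliers and is usually applied after outlier treatment.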

Examples of Data Standardization and Normalization

- Pharmaceutical Companies: Standardizing drug names across global databases ensures consistent reporting of clinical trial results and facilitates pharmacovigilance activities.

- Financial Institutions: Normalizing currency values for international transactions allows for accurate comparisons of financial performance across different countries.

- Retail Chains: Standardizing product categories across different stores enables accurate inventory management and streamlined supply chain operations.

- Government Agencies: Standardizing address formats for postal services improves mail delivery efficiency and reduces errors.

Actionable Tips for Effective Standardization and Normalization

- Establish Clear Rules: Define precise standardization rules and document them thoroughly for future reference and maintainability.

- Industry Standards: Utilize industry-standard reference data whenever possible to ensure compatibility and interoperability.

- Validation Checks: Implement validation checks after standardization to identify any errors or inconsistencies introduced during the process.

- Reversibility: Design data transformations with reversibility in mind, especially for critical data, to allow for data recovery if necessary.
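Structural standardization often combines format parsing with a reference lookup table. In this sketch the accepted date formats and the `STATE_CODES` table are hypothetical, and the day-first format is an assumption: a value such as 03/04/2024 is ambiguous, so the format list must reflect what each source system actually produces. The original values are kept alongside the standardized ones so the change can be audited or reversed, and values that fail to map are flagged rather than guessed.

```python
from datetime import datetime

# Hypothetical input formats observed in the source systems (day-first assumed for slashes).
DATE_FORMATS = ["%Y-%m-%d", "%d/%m/%Y", "%b %d, %Y"]

# Hypothetical reference table mapping variant spellings to a canonical code.
STATE_CODES = {
    "new york": "NY", "ny": "NY", "n.y.": "NY",
    "california": "CA", "calif.": "CA", "ca": "CA",
}


def standardize_date(raw):
    """Return an ISO 8601 date string, or None if no known format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None


def standardize_record(rec):
    out = {
        "order_date": standardize_date(rec["order_date"]),
        "state": STATE_CODES.get(rec["state"].strip().lower()),
        "_original": dict(rec),  # keep raw values for auditing and reversibility
    }
    # Post-standardization validation: list fields that could not be mapped.
    out["_issues"] = [f for f in ("order_date", "state") if out[f] is None]
    return out


print(standardize_record({"order_date": "15/01/2024", "state": "N.Y."}))
print(standardize_record({"order_date": "Jan 15, 2024", "state": "Calif."}))
print(standardize_record({"order_date": "2024/01/15", "state": "Texas"})["_issues"])
```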

When and Why to Use Data Standardization and Normalization

Data standardization and normalization is essential when data inconsistency hinders analysis and reporting. It is particularly valuable when:

- Integrating data from multiple sources: Standardization ensures seamless data integration and eliminates format discrepancies.

- Performing comparative analysis: Normalization enables meaningful comparisons across different datasets by adjusting for scale differences.

- Building statistical models: Standardized and normalized data is often required for accurate and reliable statistical modeling.

- Improving data quality for reporting and decision-making: Consistent data formats and scales contribute to enhanced data quality and informed decision-making.

By implementing data standardization and normalization, organizations can transform raw data into a consistent and reliable resource, unlocking valuable insights and facilitating data-driven decision-making. This makes it a core component of effective data cleansing strategies, contributing significantly to improved data quality and analytical accuracy.

6. Data Profiling and Quality Assessment

Data profiling and quality assessment is a comprehensive analytical process that examines datasets to understand their structure, content, quality, and relationships. This technique provides detailed insights into data characteristics, patterns, and quality metrics to inform data cleansing strategies and ongoing data governance. It's like conducting a thorough health check on your data, revealing its strengths, weaknesses, and areas needing improvement. This process is crucial for ensuring data accuracy and reliability before any serious analysis or decision-making.

This technique helps identify various data quality issues, including inconsistencies, missing values, outliers, and invalid data entries. By understanding the nature and extent of these issues, organizations can develop targeted data cleansing strategies to address them effectively.
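A basic column profile can be computed in a few lines of Python. This sketch, run on a hypothetical `ages` column, reports completeness, distinct values, the mix of data types, the most frequent value, and the numeric range. A stray text value among numbers, as here, is exactly the kind of invalid entry profiling is meant to surface. Treating empty strings as missing is an assumption you may want to change.

```python
from collections import Counter


def profile_column(values):
    """Summarize completeness, cardinality, type mix, and range for one column."""
    present = [v for v in values if v is not None and v != ""]  # None and "" count as missing
    profile = {
        "count": len(values),
        "missing": len(values) - len(present),
        "distinct": len(set(present)),
        "types": dict(Counter(type(v).__name__ for v in present)),
        "most_common": Counter(present).most_common(1),
    }
    # Range over genuinely numeric values only (bool is a subclass of int, so exclude it).
    numbers = [v for v in present if isinstance(v, (int, float)) and not isinstance(v, bool)]
    if numbers:
        profile["min"], profile["max"] = min(numbers), max(numbers)
    return profile


ages = [34, 29, None, 41, "unknown", 29, ""]
print(profile_column(ages))
```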

Examples of Data Profiling and Quality Assessment

- Banks: Profiling customer data before regulatory reporting ensures compliance and accurate risk assessments.

- Retailers: Analyzing product data quality across multiple channels improves inventory management and customer experience.

- Healthcare Organizations: Assessing patient record completeness enhances patient safety and supports effective treatment.

- Telecommunications Companies: Evaluating network performance data helps optimize network infrastructure and service delivery.

Actionable Tips for Effective Data Profiling

- Start High-Level: Begin with a broad overview of the data before diving into granular analysis. This approach provides a general understanding of the dataset's characteristics.

- Sampling Techniques: Use sampling for initial assessments of large datasets to save time and resources while gaining valuable preliminary insights.

- Expert Review: Combine automated profiling with domain expert review to interpret the results and identify context-specific issues.

- Regular Scheduling: Create regular profiling schedules for ongoing monitoring of data quality, allowing proactive identification and remediation of emerging issues.

When and Why to Use Data Profiling and Quality Assessment

Data profiling is essential whenever data quality is critical for decision-making, analysis, or reporting. It's particularly valuable when:

- Implementing data governance initiatives: Establishing data quality standards and monitoring compliance.

- Preparing data for migration or integration: Identifying potential compatibility issues and ensuring a smooth transition.

- Developing data cleansing strategies: Understanding the nature and extent of data quality problems to inform targeted solutions.

- Supporting business intelligence and analytics: Ensuring data accuracy and reliability for informed decision-making.

By incorporating data profiling and quality assessment into your data cleansing strategy, you can establish a strong foundation for data-driven insights and informed decision-making. This proactive approach empowers organizations to identify and address data quality challenges before they impact business outcomes, ensuring accurate, reliable, and trustworthy data for all operations.

7. Text Mining and Natural Language Processing (NLP) Cleansing

Text mining and Natural Language Processing (NLP) cleansing represent specialized data cleansing techniques for unstructured text data. These techniques prepare text for analysis and machine learning by removing noise, standardizing formats, and extracting meaningful information. This process leverages computational linguistics and NLP algorithms to transform raw text into a structured and analyzable format.

NLP cleansing involves various steps, including removing irrelevant characters, handling white spaces, converting text to lowercase, stemming or lemmatizing words, and removing stop words. These steps help to reduce data dimensionality and improve the accuracy of subsequent analysis. Furthermore, techniques like named entity recognition and part-of-speech tagging can extract valuable insights from text.
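The first few of those steps can be sketched with Python's `re` module. The stop-word list here is a small hypothetical sample (real pipelines typically use a fuller, domain-tuned list), and stemming, lemmatization, and entity recognition are left to dedicated libraries such as NLTK or spaCy. In keeping with the practice of preserving the original text, the function returns the raw input alongside the cleaned tokens.

```python
import re

# Hypothetical, deliberately small stop-word list for illustration.
STOP_WORDS = {"the", "a", "an", "and", "is", "it", "to", "was", "of"}


def clean_text(raw):
    text = raw.lower()
    text = re.sub(r"https?://\S+", " ", text)  # remove URLs
    text = re.sub(r"[^\w\s']", " ", text)      # replace punctuation/symbols with spaces (\w keeps accented letters)
    text = re.sub(r"\s+", " ", text).strip()   # collapse runs of whitespace
    tokens = [t.strip("'") for t in text.split()]  # drop stray quote marks around words
    tokens = [t for t in tokens if t and t not in STOP_WORDS]
    return {"original": raw, "tokens": tokens}


review = "The delivery was LATE!!! See https://example.com/order/123   and it's the 2nd time."
print(clean_text(review)["tokens"])  # ['delivery', 'late', 'see', "it's", '2nd', 'time']
```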

Examples of Text Mining and NLP Cleansing

- Social Media Platforms: Cleaning user-generated content for sentiment analysis, enabling businesses to understand public opinion about their brands.

- Legal Firms: Processing contract documents for clause extraction, automating legal research and due diligence.

- Customer Service Systems: Cleaning chat logs for quality analysis, identifying areas for improvement in customer interactions.

- Academic Institutions: Processing research papers for literature reviews, facilitating efficient research and knowledge discovery.

Actionable Tips for Effective Text Mining and NLP Cleansing

- Preserve Original Text: Maintain a copy of the original text alongside the cleaned version for reference and auditing.

- Domain-Specific Dictionaries: Utilize dictionaries and rules tailored to your specific domain for more accurate processing.

- Multi-Stage Pipelines: Implement multi-stage preprocessing pipelines for handling different aspects of text cleaning sequentially.

- Contextual Awareness: Consider cultural and linguistic context when defining processing rules to avoid misinterpretations.

When and Why to Use Text Mining and NLP Cleansing

Text mining and NLP cleansing are essential when dealing with large volumes of unstructured text data. These techniques are particularly valuable when:

- Analyzing customer feedback: Extracting insights from reviews and surveys to understand customer sentiment.

- Automating document processing: Streamlining workflows by automatically extracting key information from contracts, reports, and other documents.

- Improving search functionality: Enhancing search relevance by cleaning and standardizing text data.

- Developing machine learning models: Preparing text data for training machine learning models for tasks like text classification and sentiment analysis.

By effectively utilizing text mining and NLP cleansing techniques, organizations can unlock valuable insights hidden within unstructured text data, leading to data-driven decision-making and improved business outcomes. This makes these techniques an indispensable part of a comprehensive data cleansing strategy in today's data-rich environment.

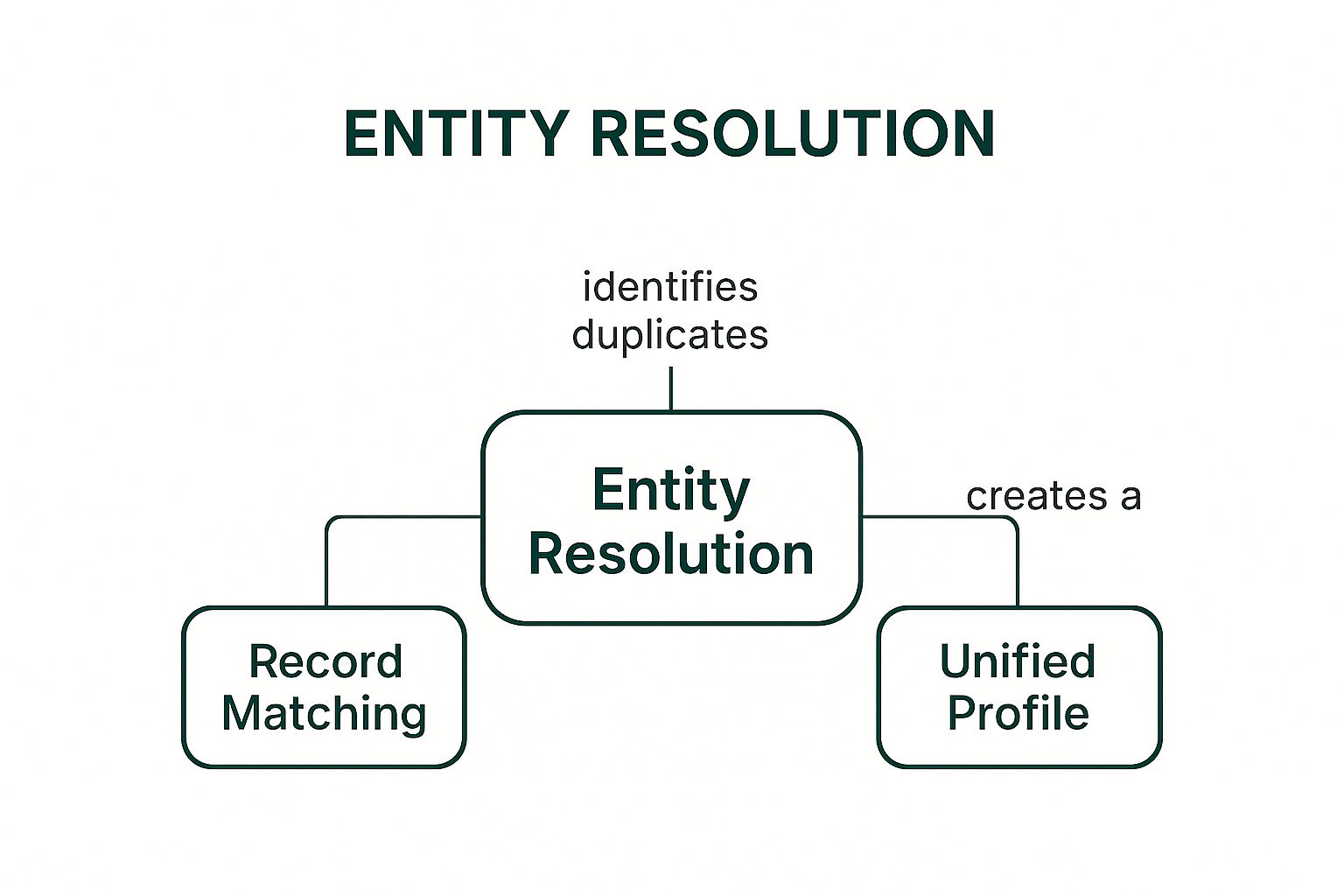

8. Record Linkage and Entity Resolution

Record linkage and entity resolution is a sophisticated data cleansing technique used to identify and connect records representing the same real-world entity, even when those records reside in different datasets or within the same dataset but contain inconsistencies. This process leverages probabilistic matching, machine learning, and graph-based algorithms to resolve entity identities despite variations in data representation, such as spelling errors, nicknames, or different address formats. This technique is crucial for achieving a unified and accurate view of entities, enabling better decision-making and analysis.

The infographic visualizes the core components of entity resolution: record matching leading to a unified profile. The visual emphasizes the connection between identifying matching records and the ultimate goal of creating a single, consistent view of each entity.

Examples of Record Linkage and Entity Resolution

- Healthcare Systems: Linking patient records across different hospitals improves patient care and reduces medical errors by providing a complete view of a patient's medical history.

- Financial Institutions: Identifying related accounts held by the same individual is vital for Anti-Money Laundering (AML) compliance and fraud detection.

- Government Agencies: Matching citizen records across different departments streamlines services and improves efficiency by avoiding redundant data entry and ensuring consistent information.

- E-commerce Platforms: Linking customer profiles across different touchpoints, such as website, mobile app, and social media, enhances personalized marketing and customer service.

Actionable Tips for Effective Record Linkage

- Hierarchical Blocking: Improve scalability by using hierarchical blocking strategies to narrow down the comparison scope, reducing computational complexity.

- Confidence Scoring: Implement confidence scoring to assess the quality of matches and prioritize high-confidence matches for further review or automated processing.

- Multiple Similarity Measures: Combine multiple similarity measures, such as string similarity and phonetic matching, to achieve robust matching across different data variations.

- Governance Processes: Establish clear governance processes for entity resolution decisions to ensure consistent and accurate results, especially when dealing with sensitive data.
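Confidence scoring with multiple similarity measures can be sketched as a weighted score. This illustration combines a `difflib` string-similarity ratio on full names, American Soundex codes on surnames (a classic phonetic algorithm designed for English names), and an exact date-of-birth comparison. The weights (0.5, 0.2, 0.3) and the thresholds separating automatic matches, manual review, and non-matches are hypothetical; production systems often estimate weights from labeled or historical data, for example with the Fellegi-Sunter probabilistic model.

```python
from difflib import SequenceMatcher

# American Soundex digit groups; vowels and h, w, y are not coded.
SOUNDEX_CODES = {
    **dict.fromkeys("bfpv", "1"), **dict.fromkeys("cgjkqsxz", "2"),
    **dict.fromkeys("dt", "3"), "l": "4", **dict.fromkeys("mn", "5"), "r": "6",
}


def soundex(name):
    letters = [c for c in name.lower() if c.isalpha()]
    if not letters:
        return ""
    code, prev = letters[0].upper(), SOUNDEX_CODES.get(letters[0], "")
    for c in letters[1:]:
        digit = SOUNDEX_CODES.get(c, "")
        if digit and digit != prev:
            code += digit
        if c not in "hw":  # h and w do not separate letters with the same code
            prev = digit
    return (code + "000")[:4]


def match_score(a, b):
    """Hypothetical weighted confidence score in [0, 1]."""
    name_a, name_b = f"{a['first']} {a['last']}".lower(), f"{b['first']} {b['last']}".lower()
    name_sim = SequenceMatcher(None, name_a, name_b).ratio()
    phonetic = 1.0 if soundex(a["last"]) == soundex(b["last"]) else 0.0
    same_dob = 1.0 if a["dob"] == b["dob"] else 0.0
    return 0.5 * name_sim + 0.2 * phonetic + 0.3 * same_dob


def classify(score, auto_match=0.9, review=0.65):
    if score >= auto_match:
        return "match"
    if score >= review:
        return "review"  # route to a human reviewer
    return "non-match"


a = {"first": "Catherine", "last": "Smith", "dob": "1985-03-12"}
b = {"first": "Katherine", "last": "Smyth", "dob": "1985-03-12"}
c = {"first": "Catherine", "last": "Smith", "dob": "1985-12-03"}  # day and month swapped?
d = {"first": "Kate", "last": "Jones", "dob": "1990-07-01"}

for other in (b, c, d):
    score = match_score(a, other)
    print(other["first"], other["last"], round(score, 2), classify(score))
```

The second pair (identical name, date of birth with day and month possibly swapped) is the kind of uncertain case that should go to review rather than be merged automatically.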

When and Why to Use Record Linkage and Entity Resolution

This data cleansing technique is essential when dealing with multiple datasets or a single dataset with inconsistent entity representation. It's particularly valuable when:

- Creating a unified view of customers or entities: Provides a 360-degree view for improved analysis and decision-making.

- Improving data accuracy for reporting and analysis: Ensures data integrity by resolving inconsistencies and linking related records.

- Enhancing the efficiency of data-driven processes: Reduces manual effort in identifying and merging related records.

- Complying with regulatory requirements: Enables accurate identification and linking of entities for compliance purposes.

Record linkage and entity resolution is a powerful data cleansing technique that empowers organizations to gain a more comprehensive and accurate understanding of their data, leading to improved operational efficiency, better decision-making, and enhanced compliance.

Data Cleansing Techniques: Key Feature Comparison

| Technique | Implementation Complexity 🔄 | Resource Requirements ⚡ | Expected Outcomes 📊 | Ideal Use Cases 💡 | Key Advantages ⭐ |
|---|---|---|---|---|---|
| Duplicate Detection and Removal | Medium to high; requires tuning similarity thresholds | High computational cost for large datasets | Cleaner datasets with fewer redundancies | Customer data cleanup, product catalog deduplication | Improves data quality and reduces storage costs |
| Data Validation and Constraint Checking | Low to medium; rule definition and maintenance required | Moderate; depends on rule engine complexity | High data integrity and reduced invalid inputs | Financial transactions, compliance data input | Prevents invalid data and maintains consistency |
| Missing Value Imputation | Medium to high; advanced methods can be complex | Moderate to high; depends on method chosen | Complete datasets suitable for analysis | Clinical trials, survey data, sensor data | Preserves sample size and improves model quality |
| Outlier Detection and Treatment | Medium; method selection essential | Moderate; depends on algorithms used | Enhanced model accuracy and error identification | Fraud detection, quality control, anomaly detection | Identifies errors and unusual events effectively |
| Data Standardization and Normalization | Medium; requires comprehensive rule definition | Moderate to high; especially for large data | Uniform data enabling integration and comparison | Cross-system data integration, reporting | Facilitates accurate comparisons and ML training |
| Data Profiling and Quality Assessment | Medium to high; requires skilled analysis | High; intensive computation and analysis | Detailed insights into data quality and issues | Data governance, preliminary data analysis | Helps prioritize cleansing and improve quality |
| Text Mining and NLP Cleansing | High; complex preprocessing pipelines | High computational resources | Cleaned text ready for analysis and modeling | Social media, legal documents, customer feedback | Enables processing of unstructured textual data |
| Record Linkage and Entity Resolution | Very high; involves probabilistic and ML approaches | Very high; computationally intensive | Unified entity views across datasets | Master data management, fraud detection | Enables comprehensive entity matching |

Data Cleansing Mastery: Empowering Your Business with Enhanced Data Quality

This article has explored eight crucial data cleansing techniques. From identifying and removing duplicates to leveraging text mining and NLP, each technique contributes to building a robust data foundation. Mastering these techniques is no longer a luxury but a necessity for any organization aiming to thrive in today's data-driven world.

The Value of Clean Data

Clean data is the cornerstone of accurate reporting, effective decision-making, and insightful analysis. By implementing the strategies outlined in this article, you can significantly improve data quality, leading to:

- Better Business Decisions: Clean data provides a clear and accurate view of your business operations, enabling informed, data-backed decisions.

- Increased Efficiency: Streamlined data processes reduce time wasted on manual cleaning and error correction, boosting overall productivity.

- Competitive Advantage: Leveraging the full potential of your data unlocks valuable insights, giving you a competitive edge in the market.

- Improved Forecasting and Planning: Accurate data enables more reliable forecasting and planning, leading to better resource allocation and strategic development.

Implementing Data Cleansing Best Practices

Remember, data cleansing is not a one-time task. It's an ongoing process that requires consistent effort and the right tools. Regularly implementing these techniques will ensure your data remains accurate, reliable, and ready to drive impactful results.

- Prioritize Data Quality from the Start: Implement data quality checks at every stage of data entry and collection.

- Automate Where Possible: Leverage tools and technologies to automate repetitive cleansing tasks, saving time and resources.

- Document Your Processes: Create clear documentation of your data cleansing procedures for consistency and future reference.

Taking Your Data Cleansing to the Next Level with AI

While traditional Excel methods can be effective, incorporating AI-powered tools can significantly enhance your data cleansing efforts. Tools like AIForExcel empower users to perform complex data manipulation and analysis within the familiar Excel environment, streamlining the entire process. This integration of AI with existing workflows accelerates data cleansing, making it more efficient and accessible to users of all skill levels.

Empowering Your Future with Clean Data

By embracing these data cleansing techniques and incorporating intelligent tools, you are investing in the future of your organization. Clean, reliable data empowers your business to make better decisions, optimize operations, and unlock its full potential. Take action today and transform your data into a powerful asset.

Want to streamline your data cleansing efforts within Excel? Explore the power of AIForExcel and discover how easy it is to transform your data into actionable insights. Visit AIForExcel to learn more and start your free trial today.