Transforming Messy Data into Actionable Insights

Clean data is the foundation of accurate analysis and informed decision-making. Inaccurate data leads to flawed insights, hindering your ability to make sound business decisions. This listicle provides ten essential data cleaning techniques to empower you to transform raw, messy data into a reliable source of actionable insights. You'll learn practical applications, including Excel implementations using AIForExcel, for each technique.

These techniques are crucial for anyone working with data, from creating reports and dashboards to building complex financial models. Whether you're a seasoned analyst or just starting out, mastering data cleaning is essential for success. This listicle offers specific, actionable guidance, moving beyond general advice to provide real-world applications.

We'll cover the following key data cleaning techniques:

- Data Deduplication

- Missing Data Imputation

- Outlier Detection and Treatment

- Data Type Conversion and Standardization

- Data Validation and Constraint Checking

- Text Data Cleaning and Normalization

- Data Profiling and Quality Assessment

- Data Transformation and Normalization

- Error Detection and Correction

- Data Integration and Consolidation

By mastering these data cleaning techniques, you will:

- Improve the accuracy of your reports and dashboards.

- Make better informed business decisions.

- Increase the reliability of your data analysis.

- Save time and resources by streamlining your data cleaning process.

This listicle provides a deep dive into each technique, explaining what it is, when to use it, and how to implement it in Excel, including examples with AIForExcel. Get ready to unlock the true potential of your data.

1. Data Deduplication

Data deduplication is a crucial data cleaning technique that identifies and removes duplicate records from your datasets. Maintaining clean data is essential for accurate analysis and reporting. Deduplication helps achieve this by eliminating redundant information, which improves data integrity and reduces storage costs. This process involves comparing records across multiple attributes to find exact or near-duplicate matches. Imagine a customer database with multiple entries for the same person - deduplication streamlines this information into a single, accurate record.

Examples of Data Deduplication in Action

Deduplication is valuable across various domains:

- Customer Relationship Management (CRM): Removing duplicate customer entries ensures accurate sales tracking and targeted marketing.

- Email Marketing: Deduplicating email lists avoids sending multiple messages to the same recipient, improving campaign effectiveness and reducing spam complaints.

- E-commerce: Maintaining a clean product catalog with accurate product information enhances the customer experience and streamlines inventory management.

Actionable Deduplication Tips

Implementing effective data deduplication requires a strategic approach:

- Multiple Matching Criteria: Don't rely solely on one attribute. Use a combination of factors (e.g., name, email, address) for accurate identification.

- Staging Area: Before permanently deleting duplicates, review them in a staging area to prevent accidental removal of legitimate data.

- Probabilistic Matching: For near duplicates, consider probabilistic matching algorithms that identify records with slight variations.

When and Why Use Data Deduplication?

Deduplication is particularly beneficial when:

- Data Integrity is Compromised: Duplicate records can lead to inconsistencies and inaccuracies in analysis and reporting. Deduplication restores data integrity.

- Storage Costs are High: Redundant data consumes unnecessary storage space. Deduplication helps optimize storage utilization and reduces costs.

- Processing Time is Slow: Large datasets with duplicates can slow down processing times. Removing duplicates improves efficiency.

The following video offers a practical demonstration of deduplication techniques in Excel:

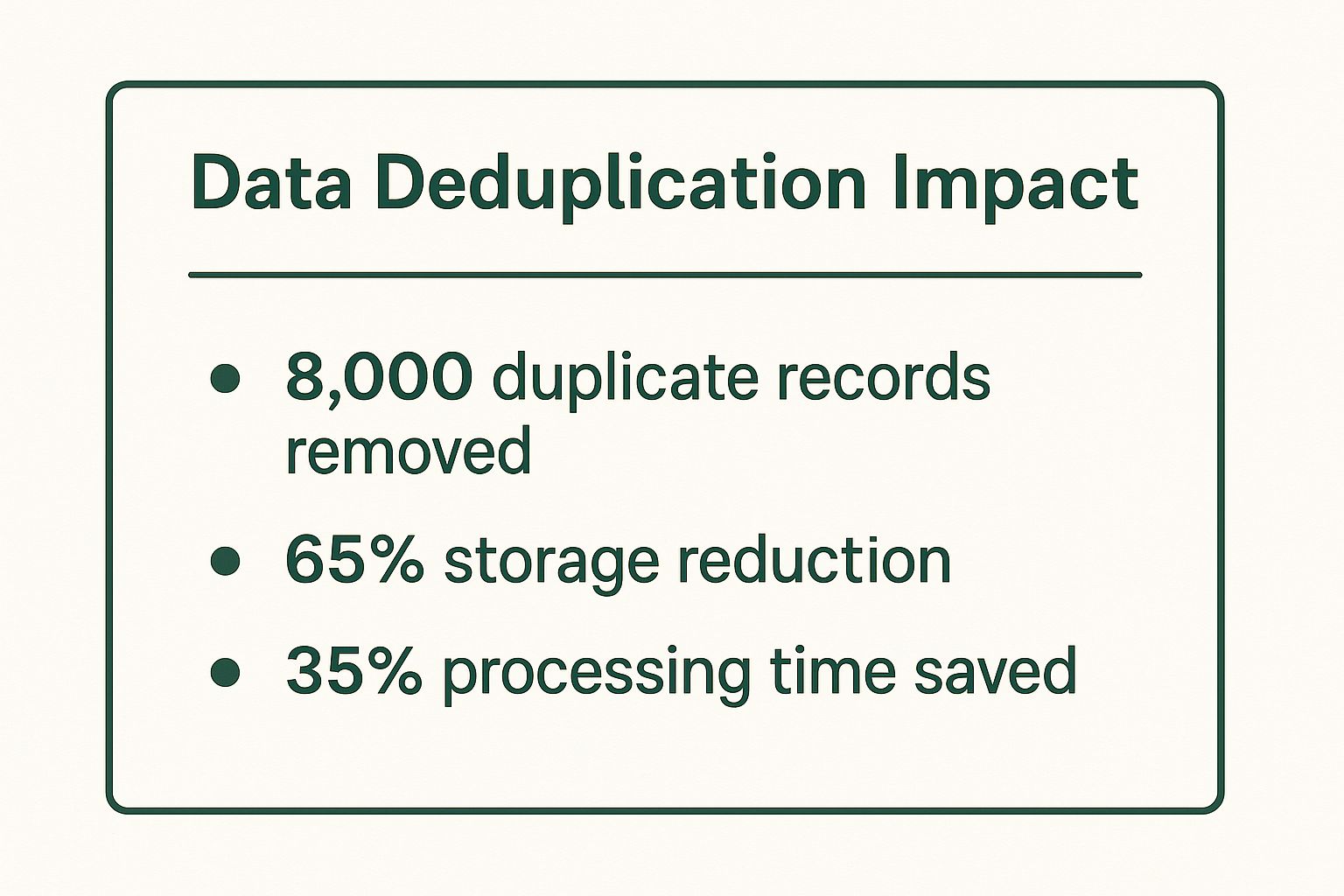

The infographic below, "Data Deduplication Impact," visualizes the potential benefits of deduplication by highlighting the number of duplicate records removed, percentage storage reduction, and processing time saved.

As the infographic demonstrates, data deduplication can significantly reduce storage needs and improve processing efficiency, showcasing its value as a core data cleaning technique. These improvements translate to cost savings and faster data analysis. Maintaining a clean and accurate dataset through deduplication is essential for making informed business decisions.

2. Missing Data Imputation

Missing data imputation is a critical data cleaning technique that addresses gaps or missing values within datasets. These missing values can arise from various sources, such as survey non-responses, equipment malfunctions, or incomplete records. Imputation involves filling these gaps using statistical methods, machine learning algorithms, or domain-specific knowledge. This process prevents data loss, ensuring that datasets remain complete and suitable for accurate analysis and modeling.

Examples of Missing Data Imputation in Action

Missing data imputation finds practical applications across diverse fields:

- Survey Research: Imputing missing responses in surveys allows researchers to utilize the maximum available data for analysis.

- Healthcare: In clinical trials, imputation can address missing patient data due to dropouts, preserving the study's statistical power.

- Finance: Imputing missing financial data ensures accurate reporting and forecasting.

Actionable Missing Data Imputation Tips

Effective imputation requires careful consideration and planning:

- Understand the Missing Data Mechanism: Determine if the data is missing completely at random, missing at random, or missing not at random, as this informs the appropriate imputation method.

- Multiple Imputation: Generate multiple imputed datasets to account for uncertainty associated with imputation, providing more robust results.

- Validation: Evaluate the quality of the imputed data using a holdout dataset to ensure the chosen method maintains data integrity.

When and Why Use Missing Data Imputation?

Imputation becomes especially valuable when:

- Missing Data Impacts Analysis: Missing values can skew statistical analysis and lead to inaccurate conclusions. Imputation preserves the data's usefulness.

- Complete Datasets are Required: Certain analytical methods require complete datasets. Imputation ensures data compatibility for these techniques.

- Data Loss is Unacceptable: When missing data represents valuable information, imputation prevents discarding potentially critical insights.

Missing data imputation is essential for maximizing data utility and producing reliable analytical results. By carefully selecting and implementing imputation methods, analysts can preserve the integrity and completeness of their datasets, enabling more accurate insights and informed decision-making.

3. Outlier Detection and Treatment

Outlier detection and treatment is a crucial data cleaning technique that identifies and handles data points significantly deviating from the expected pattern or distribution. These anomalous values can arise from errors in data entry, measurement inaccuracies, or genuine extreme events. Identifying and treating these outliers is essential for robust data analysis and reliable insights. Accurate outlier detection helps maintain data integrity and ensures the validity of statistical analyses.

Examples of Outlier Detection and Treatment in Action

This technique finds application across diverse fields:

- Fraud Detection (Banking): Identifying unusual transaction patterns can help detect fraudulent activities.

- Quality Control (Manufacturing): Detecting products with measurements outside acceptable tolerances ensures product quality.

- Medical Diagnosis: Outliers in patient vital signs or test results might indicate critical health conditions.

Actionable Outlier Detection and Treatment Tips

Effective outlier handling involves a nuanced approach:

- Domain Knowledge: Use your understanding of the data to validate whether an outlier is a genuine anomaly or an error.

- Multiple Methods: Apply several outlier detection methods (e.g., box plots, z-scores, clustering) to ensure comprehensive identification.

- Visualizations: Visualize data before and after outlier treatment to assess the impact of the adjustments.

When and Why Use Outlier Detection and Treatment?

Outlier detection is particularly important when:

- Data Accuracy is Critical: Outliers can skew statistical analyses and lead to inaccurate conclusions. Treatment helps ensure reliable results.

- Model Performance is Affected: Outliers can negatively impact the performance of machine learning models. Removing or transforming them improves model accuracy.

- Decision-Making Relies on Data: Outliers can distort data-driven decisions. Proper treatment supports informed and effective decision making.

Outlier detection and treatment enhances data quality, leading to more accurate analyses, improved model performance, and ultimately, better decision making. Data cleaning through outlier analysis helps identify and address data anomalies for more robust and reliable outcomes.

4. Data Type Conversion and Standardization

Data type conversion and standardization is a fundamental data cleaning technique that ensures data fields have consistent and appropriate data types, formats, and structures. This process is essential for accurate data analysis, reporting, and integration with other systems. Imagine importing a CSV file where numbers are stored as text - converting these strings to numerical data types allows for proper calculations and analysis. Similarly, standardizing date formats eliminates ambiguities and ensures consistent sorting and filtering.

Examples of Data Type Conversion and Standardization in Action

This technique is invaluable in various contexts:

- Converting CSV Imports: Transforming imported data into the correct data types (e.g., strings to numbers, dates to date/time format) enables meaningful analysis within databases or spreadsheets.

- Standardizing Date Formats: Ensuring dates are consistently formatted (e.g., YYYY-MM-DD) across different systems prevents errors and allows for accurate date comparisons.

- Encoding Categorical Variables: Converting categorical data (e.g., "red," "blue," "green") into numerical representations prepares data for machine learning algorithms.

Actionable Data Type Conversion and Standardization Tips

Follow these tips for effective implementation:

- Validate Conversions: After converting data types, always validate the results with a sample dataset to ensure accuracy and identify potential errors.

- Preserve Original Values: If possible, retain the original values in a separate field while performing conversions, allowing you to revert to the original format if needed.

- Handle Edge Cases: Consider and address potential edge cases, such as missing values or unexpected data formats, to prevent errors during conversion.

When and Why Use Data Type Conversion and Standardization?

This technique is crucial when:

- Data Integration is Required: Standardizing data types and formats facilitates seamless integration of data from different sources.

- Data Analysis is Inaccurate: Inconsistent data types can lead to incorrect calculations and skewed analytical results. Conversion ensures accurate analysis.

- Machine Learning Models are Used: Converting categorical variables into numerical representations is essential for many machine learning algorithms.

Data type conversion and standardization ensures data consistency and accuracy, which are vital for reliable data analysis, effective reporting, and seamless data integration. This process lays the foundation for sound decision-making based on dependable data. By transforming data into its correct format, we unlock its full potential for generating valuable insights.

5. Data Validation and Constraint Checking

Data validation and constraint checking are essential data cleaning techniques that ensure your data adheres to predefined rules and quality standards. This process involves verifying data against specific criteria, such as valid ranges, formats, relationships, and business logic, ensuring data integrity and reliability for accurate analysis. Imagine a dataset where customer ages are negative or email addresses are missing the "@" symbol—data validation corrects these inconsistencies.

Examples of Data Validation and Constraint Checking in Action

This technique is invaluable across various domains:

- Email Marketing: Validating email addresses for proper format prevents bounces and improves deliverability.

- Financial Modeling: Checking numerical data within defined ranges prevents illogical calculations and ensures accurate financial projections.

- E-commerce: Product code verification prevents incorrect product listings and streamlines inventory management. Geographic coordinate validation for shipping addresses can also improve delivery accuracy.

- Healthcare: Age range checking in patient demographics ensures data accuracy for clinical trials and research. Credit score validation can be used for patient financing options.

Actionable Data Validation and Constraint Checking Tips

Effective data validation requires a proactive approach:

- Collaborative Rule Definition: Work with business users to define accurate validation rules that reflect real-world requirements.

- Progressive Validation Levels: Implement validation at different stages, from initial data entry to final reporting, to catch errors early.

- Clear Error Messages: Provide informative error messages that guide users towards correcting invalid data.

- Log Validation Failures: Track and analyze validation failures to identify recurring data quality issues and improve data collection processes.

- Regular Review and Update: Business rules and data constraints change over time. Review and update your validation rules periodically to maintain their effectiveness.

When and Why Use Data Validation and Constraint Checking?

Data validation is particularly crucial when:

- Data Accuracy is Paramount: Inaccurate data can lead to flawed decisions and costly mistakes. Validation ensures reliable and trustworthy data for analysis.

- Compliance is Mandatory: Certain industries require data to adhere to strict regulations. Validation helps meet these compliance requirements.

- Data Integration is Necessary: Combining data from different sources requires validation to ensure consistency and prevent integration errors.

Learn more about Data Validation and Constraint Checking in Excel at this helpful resource. Applying these techniques strengthens data integrity, contributing significantly to improved decision-making and overall business efficiency.

6. Text Data Cleaning and Normalization

Text data cleaning and normalization are essential data cleaning techniques specifically designed for unstructured text data. These techniques transform raw text into a consistent and usable format for analysis and modeling. This process involves removing unwanted characters, standardizing case and formatting, handling encoding issues, and potentially extracting meaningful information from free-text fields. Think of it as refining raw ore into usable metal - it's crucial for getting the most value from your data.

Examples of Text Data Cleaning and Normalization in Action

Text data cleaning is crucial in various applications:

- Social Media Sentiment Analysis: Cleaning tweets or comments by removing hashtags, mentions, and special characters allows for accurate sentiment analysis.

- Customer Feedback Processing: Normalizing customer reviews by standardizing abbreviations and slang helps identify key themes and trends.

- Search Engine Optimization (SEO): Cleaning website content by removing unnecessary HTML tags and standardizing keywords improves search engine ranking.

Actionable Text Cleaning and Normalization Tips

Here are some practical tips for effective text data cleaning:

- Preserve Original Text: Always create a backup copy of your original text data before applying any cleaning operations.

- Language-Specific Tools: Utilize language-specific libraries and tools (like NLTK for Python) for tasks like stemming and lemmatization.

- Contextual Stop Word Removal: Carefully consider the context before removing stop words, as they can sometimes carry valuable information.

When and Why Use Text Data Cleaning and Normalization?

Text data cleaning and normalization are particularly important when:

- Dealing with Unstructured Data: Raw text data is often messy and inconsistent. Cleaning ensures data usability for analysis.

- Improving Model Accuracy: Cleaned and normalized text improves the accuracy of machine learning models trained on text data.

- Enabling Meaningful Analysis: Standardized text enables more accurate analysis of trends, patterns, and insights.

Data cleaning techniques, including text data cleaning and normalization, are essential for extracting meaningful insights from text data. These techniques ensure data accuracy and reliability, which are crucial for informed decision-making.

7. Data Profiling and Quality Assessment

Data profiling and quality assessment is a crucial data cleaning technique that systematically examines data to understand its structure, content, quality, and inherent relationships. This process involves statistical analysis, pattern detection, and the calculation of key quality metrics to provide valuable insights into data characteristics. Think of it as a comprehensive health check for your data, identifying potential issues before they impact analysis and decision-making. Maintaining clean data is essential for accurate analysis and reporting, and data profiling helps identify areas for improvement.

Examples of Data Profiling and Quality Assessment in Action

Data profiling is a valuable tool across numerous applications:

- Data Warehouse Quality Assessment: Ensuring the accuracy and consistency of data within a data warehouse is critical for reliable business intelligence.

- Migration Project Analysis: Profiling source data before migration helps identify potential data quality issues and plan for necessary transformations.

- Regulatory Compliance Reporting: Data profiling helps organizations meet regulatory requirements by ensuring data accuracy and completeness.

Actionable Data Profiling Tips

Effective data profiling requires a strategic approach:

- Profile Data Regularly: Don't just profile data once. Regular profiling helps identify emerging data quality issues.

- Focus on Business-Critical Fields: Prioritize profiling fields that directly impact critical business decisions.

- Use Visualization: Communicate profiling findings effectively through charts and graphs.

When and Why Use Data Profiling and Quality Assessment?

Data profiling is particularly beneficial when:

- Data Quality is Unknown: When working with new or unfamiliar datasets, profiling provides a baseline understanding of data characteristics.

- Data Migration is Planned: Profiling helps identify potential data quality issues before migrating data to a new system.

- Compliance is Required: Data profiling supports regulatory compliance by ensuring data accuracy and completeness.

Learn more about Data Profiling and Quality Assessment at https://ai-for-excel.com/blog/free-data-analysis-tools. Utilizing free data analysis tools can enhance the profiling process. By understanding your data through profiling, you lay the groundwork for effective data cleaning and informed decision-making. Data profiling contributes significantly to the overall data cleaning process by identifying areas requiring attention and ultimately leading to more accurate and reliable insights.

8. Data Transformation and Normalization

Data transformation and normalization are crucial data cleaning techniques that convert data from one format, structure, or scale to another, making it suitable for analysis or integration. Clean and consistent data is essential for accurate analysis, and transformation ensures data compatibility and improves model performance. This process involves techniques like scaling numerical values, encoding categorical variables, and restructuring data layouts to enhance data usability and interpretation. Imagine analyzing sales data with prices in different currencies - transformation standardizes this information for meaningful comparison.

Examples of Data Transformation and Normalization in Action

Data transformation and normalization are valuable across many fields:

- Machine Learning: Feature scaling ensures that all features contribute equally to model training, preventing bias towards features with larger values.

- Database Normalization: Restructuring database tables reduces redundancy and improves data integrity, leading to efficient storage and retrieval.

- Financial Analysis: Transforming financial data into ratios and percentages facilitates comparisons across different companies and time periods.

Actionable Transformation and Normalization Tips

Implementing effective data transformation and normalization requires careful planning:

- Understand Data Distribution: Before applying transformations, analyze the data distribution to determine the most appropriate scaling method.

- Document Transformation Parameters: Keep track of the transformation parameters used (e.g., scaling factors, encoding schemes) for reverse operations or future reference.

- Validate Transformed Data Quality: After transformation, validate the data to ensure its accuracy and consistency. Check for any unexpected changes or errors.

When and Why Use Data Transformation and Normalization?

Data transformation and normalization are particularly beneficial when:

- Data is on Different Scales: Transforming data to a common scale prevents features with larger values from dominating analysis or model training. Learn more about Data Transformation and Normalization and its applications in Excel.

- Data Has Outliers: Normalization techniques can help mitigate the impact of outliers on analysis and model performance.

- Integrating Data from Different Sources: Transformation ensures compatibility when combining data from various sources with different formats or structures.

Data transformation and normalization significantly enhance data quality and usability, enabling more accurate analysis, better model performance, and improved decision-making. Maintaining a consistent and standardized dataset through these techniques is essential for deriving meaningful insights and achieving business objectives.

9. Error Detection and Correction

Error detection and correction is a vital data cleaning technique that systematically identifies and rectifies errors within datasets. These errors can range from simple typos and inconsistencies to more complex logical errors. Maintaining accurate data is paramount for reliable analysis and decision-making. This process combines automated detection algorithms with manual review to ensure data accuracy and integrity. Imagine a product catalog with inconsistent pricing or a financial report with incorrect calculations - error detection and correction helps resolve these issues.

Examples of Error Detection and Correction in Action

Error detection and correction finds applications across diverse fields:

- Address Standardization Systems: Correcting misspellings and inconsistencies in addresses ensures accurate delivery and efficient logistics.

- Product Catalog Cleanup: Identifying and rectifying inaccurate product descriptions or pricing information improves customer experience and sales.

- Financial Transaction Validation: Detecting and correcting errors in financial transactions prevents accounting discrepancies and ensures regulatory compliance.

Actionable Error Detection and Correction Tips

Effective error detection and correction requires a structured approach:

- Combine Multiple Detection Methods: Implement a variety of methods such as data quality rules, statistical analysis, and machine learning algorithms for comprehensive error identification.

- Prioritize Errors by Business Impact: Focus on correcting errors that have the most significant impact on business operations and decision-making.

- Maintain Error Correction Logs: Documenting corrected errors allows for tracking progress and identifying recurring error patterns.

When and Why Use Error Detection and Correction?

Error detection and correction is especially valuable when:

- Data Quality is Questionable: Suspected errors or inconsistencies in the data require a systematic approach to identification and correction. This process ensures data accuracy for reliable analysis.

- Compliance Requirements Exist: Regulatory compliance often mandates data accuracy. Error detection and correction helps meet these requirements.

- Decision-Making Relies on Data Integrity: Accurate data is essential for informed decision-making. Error detection and correction helps mitigate the risk of flawed decisions based on inaccurate data.

Implementing robust error detection and correction procedures is crucial for maintaining data quality and ensuring the reliability of data-driven insights. This technique plays a key role in enabling informed business decisions and operational efficiency.

10. Data Integration and Consolidation

Data integration and consolidation is a crucial data cleaning technique that combines data from multiple sources into a unified, consistent dataset. Maintaining a single source of truth is essential for accurate analysis and informed decision-making. This process involves resolving discrepancies in data schemas, handling conflicting information, and ensuring data consistency across various systems and formats. Think of it as assembling a jigsaw puzzle where each piece represents data from a different source - integration and consolidation create the complete picture.

Examples of Data Integration and Consolidation in Action

Data integration and consolidation proves invaluable in various contexts:

- Customer 360 Initiatives: Combining customer data from sales, marketing, and service departments provides a holistic view of each customer.

- Data Warehouse Construction: Integrating data from transactional systems into a central repository enables comprehensive business intelligence and reporting.

- Master Data Management: Creating a single, authoritative source for key business entities (e.g., customers, products) ensures data consistency across the organization.

Actionable Data Integration and Consolidation Tips

Effective data integration and consolidation requires a structured approach:

- Map Data Lineage: Carefully track the origin and transformations of data to ensure transparency and traceability.

- Establish Clear Conflict Resolution Rules: Define how to handle conflicting data values from different sources, prioritizing accuracy and reliability.

- Incremental Integration: Implement incremental data updates to minimize disruption and maintain system performance.

When and Why Use Data Integration and Consolidation?

Data integration and consolidation is particularly beneficial when:

- Data Silos Exist: Data scattered across multiple systems hinders comprehensive analysis. Integration breaks down these silos.

- Reporting is Inefficient: Gathering data from various sources is time-consuming. Consolidation streamlines reporting processes.

- Decision-Making is Impaired: Inconsistent data leads to inaccurate insights. Integration provides a reliable foundation for decision-making.

Data integration and consolidation is essential for organizations seeking to leverage the full potential of their data. By unifying disparate data sources, this technique empowers businesses with a clear, consistent, and comprehensive view of their operations, customers, and market landscape. This, in turn, enables more informed decision-making and drives better business outcomes.

Data Cleaning Techniques Comparison Matrix

| Technique | Implementation Complexity 🔄 | Resource Requirements ⚡ | Expected Outcomes 📊 | Ideal Use Cases 💡 | Key Advantages ⭐ |

|---|---|---|---|---|---|

| Data Deduplication | Medium to High (fuzzy matching adds complexity) 🔄🔄 | Moderate to High (computationally expensive for large sets) ⚡ | Reduced storage, improved data quality, accurate analysis 📊 | CRMs, marketing lists, product catalogs, financial/medical records 💡 | Reduces storage, improves quality, prevents skewed data ⭐ |

| Missing Data Imputation | Medium (statistical/machine learning methods) 🔄 | Moderate (varies by technique) ⚡ | Preserved dataset size & completeness, reduces bias 📊 | Surveys, sensor failures, clinical trials, financial/web data gaps 💡 | Maintains dataset size, reduces bias, flexible approaches ⭐ |

| Outlier Detection & Treatment | Medium to High (statistical + ML methods) 🔄🔄 | Moderate to High (depending on dataset size) ⚡ | Improved model accuracy, error detection, anomaly exposure 📊 | Fraud detection, quality control, medical, security, finance 💡 | Enhances reliability, identifies errors & patterns ⭐ |

| Data Type Conversion & Standardization | Low to Medium (mostly rule-based) 🔄 | Low to Moderate ⚡ | Consistent formats & types, fewer errors, better integration 📊 | Database imports, date/currency formatting, encoding for ML 💡 | Ensures consistency, prevents errors, improves performance ⭐ |

| Data Validation & Constraint Checking | Medium (rule definitions needed) 🔄 | Low to Moderate ⚡ | High data quality at source, compliance, early error detection 📊 | Business rules enforcement, format/range checks, regulatory compliance 💡 | Prevents errors, ensures compliance, detects early issues ⭐ |

| Text Data Cleaning & Normalization | Medium (language-dependent, computationally intensive) 🔄 | Moderate to High (depending on text volume) ⚡ | Improved text analysis, standardized representation 📊 | Social media, feedback, document management, SEO, legal texts 💡 | Standardizes text, reduces dimensionality, improves analysis ⭐ |

| Data Profiling & Quality Assessment | Medium (requires skilled interpretation) 🔄 | Moderate to High (large datasets can be demanding) ⚡ | Comprehensive data understanding, early quality issue detection 📊 | Data warehouses, migrations, compliance, master data, analytics prep 💡 | Guides cleaning, supports governance, informed decisions ⭐ |

| Data Transformation & Normalization | Medium (varied methods, parameter tuning) 🔄 | Moderate ⚡ | Better algorithm performance, standardized scales 📊 | ML feature scaling, ETL, database normalization, financial/survey data 💡 | Improves performance, reduces bias, enables integration ⭐ |

| Error Detection & Correction | Medium (pattern and ML-based methods) 🔄 | Moderate ⚡ | Higher accuracy, less manual review, error prevention 📊 | Address standardization, catalogs, financial & medical data validation 💡 | Improves accuracy, reduces manual effort, prevents error spread ⭐ |

| Data Integration & Consolidation | High (complex schema and conflict resolution) 🔄🔄🔄 | High (resource intensive and ongoing maintenance) ⚡ | Unified datasets, eliminated silos, better accessibility 📊 | Customer 360, MDM, warehousing, M&A, IoT data aggregation 💡 | Comprehensive data, reduces redundancy, holistic analysis ⭐ |

Achieving Data Clarity: A Foundation for Success

In this comprehensive guide, we've explored ten essential data cleaning techniques, from deduplication and imputation to transformation and consolidation. Mastering these techniques is crucial for anyone working with data, especially in Excel. Clean data is the bedrock of accurate analysis, reliable reporting, and effective decision-making. By implementing the strategies outlined in this article, you're not simply organizing spreadsheets; you're laying the foundation for achieving your business objectives.

Key Takeaways for Data Cleaning Success

Data Quality is Paramount: Remember that the quality of your insights is directly tied to the quality of your data. Garbage in, garbage out, as the saying goes. Data cleaning is not a one-time task but an ongoing process.

Context Matters: The most appropriate data cleaning technique depends on the specific context of your data and your analytical goals. Understanding the nuances of each method is vital.

Embrace Automation: Tools like AIForExcel can significantly streamline the data cleaning process. Leveraging AI can save you time and reduce the risk of human error.

Actionable Next Steps: Putting Theory into Practice

Prioritize Your Data Challenges: Identify the most pressing data quality issues affecting your work. Which areas are causing the most significant roadblocks to accurate analysis?

Start Small, Iterate: Don't feel overwhelmed by the breadth of data cleaning techniques. Begin by focusing on one or two key areas and gradually expand your skillset.

Experiment with AI-Powered Tools: Explore the capabilities of AIForExcel to automate and enhance your data cleaning efforts.

The Transformative Power of Clean Data

Clean data isn't just about neat spreadsheets; it's about unlocking the true potential within your data. Accurate data empowers you to:

Identify Trends and Patterns: Uncover hidden insights that drive better business decisions.

Make Informed Predictions: Forecast future outcomes with greater confidence and accuracy.

Improve Operational Efficiency: Streamline processes and optimize resource allocation.

Gain a Competitive Edge: Leverage data-driven insights to stay ahead of the curve.

By dedicating time and effort to mastering data cleaning techniques, you're investing in the long-term success of your projects and your organization. Clean data fosters clarity, confidence, and ultimately, better outcomes.

Ready to transform your data cleaning process? Supercharge your Excel workflows with AIForExcel, your AI-powered assistant for achieving data clarity. Visit AIForExcel today and experience the difference.